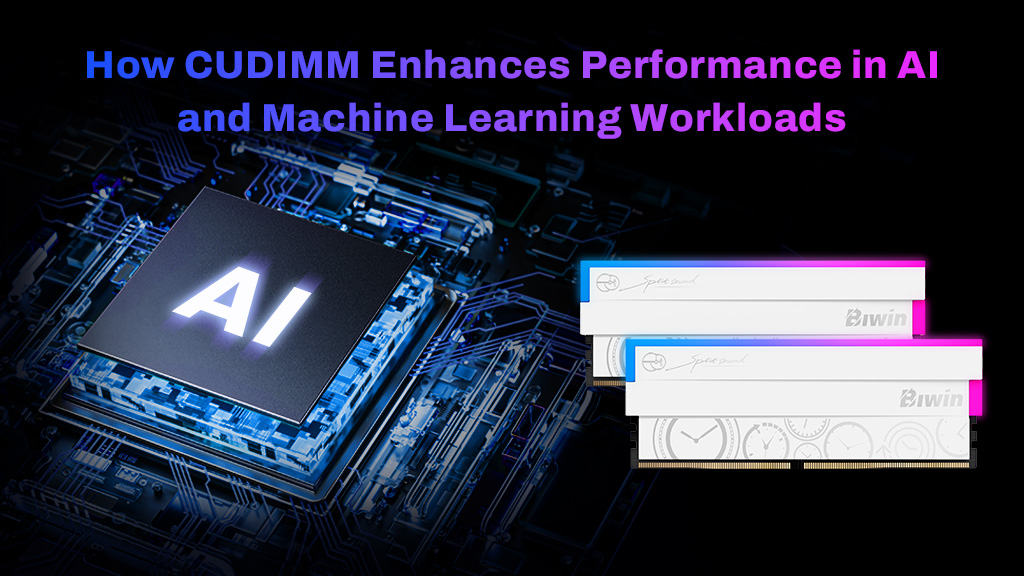

The greatest advance in memory performance in recent years hasn't come from a new generation, but from a new standard. CUDIMM memory kits include a new onboard component, the Client Clock Driver (CKD), which re-drives the memory clock signal on the module itself. This improves stability and raises the maximum achievable frequency. That unlocks much faster memory for compatible systems and benefits all kinds of workloads, including AI and machine learning.

If you’re looking to build a PC for machine learning or AI workloads in 2025, it’s worth considering CUDIMM for the best possible outcomes.

What is CUDIMM?

CUDIMM (clocked unbuffered DIMM) is the latest DDR5 module standard. It goes beyond what's possible with traditional DDR5 UDIMM modules by supporting ever-higher memory frequencies. Where traditional memory kits reach a performance peak of around 6,400 megatransfers per second (MT/s), CUDIMM memory can run at 8,000 MT/s or even higher, with full support from the latest CPUs.
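To put those transfer rates in context, peak theoretical bandwidth is the transfer rate multiplied by the bytes moved per transfer and the number of memory channels. The Python sketch below assumes a typical desktop dual-channel setup with a 64-bit (8-byte) data path per channel and ignores protocol overhead. These assumptions are ours rather than figures from this article, and measured bandwidth will always be lower.

```python
# Back-of-envelope peak DDR5 bandwidth.
# Assumptions (illustrative, not from the article): a 64-bit (8-byte) data path
# per memory channel, two channels populated (typical desktop dual-channel),
# and no protocol or refresh overhead. Real, measured bandwidth is lower.

BYTES_PER_TRANSFER = 8  # 64 data bits per channel per transfer


def peak_bandwidth_gb_s(mt_per_s: int, channels: int = 2) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_s = mt_per_s * 1_000_000  # MT/s -> transfers per second
    return transfers_per_s * BYTES_PER_TRANSFER * channels / 1e9


for speed in (6400, 8000):
    print(f"DDR5-{speed}: {peak_bandwidth_gb_s(speed):.1f} GB/s")
```

Under these assumptions, moving from 6,400 to 8,000 MT/s raises the theoretical peak from 102.4 GB/s to 128 GB/s, a 25% increase.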

However, while CUDIMM memory modules will work perfectly well in older Intel 13th and 14th generation systems and in AMD-powered PCs, the only way to get their full functionality in early 2025 is with Intel's Arrow Lake processors. The Core Ultra 200 range supports memory speeds north of 6,400 MT/s, making it the best platform for experiencing the fastest memory modules available. For more information, read our other post: What is CUDIMM? Everything you need to know.

How does CUDIMM enhance AI and machine learning performance?

AI model training and inference involve handling large amounts of data, and the speed at which that data can be read is often the limiting factor in how fast a model can be trained. Faster memory, made possible by CUDIMM, helps accelerate that process. The additional stability CUDIMM provides also helps reduce data errors, which ultimately leads to cleaner AI training and less chance of inference issues down the line.

Faster memory makes it possible to manage larger and more complicated AI models, too. CUDIMM’s increased bandwidth means fewer bottlenecks for system performance, leading to more efficient and streamlined model training.
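The link between read speed and model size can be made concrete with a common rule of thumb for running a language model from system memory: if generating each token requires reading every weight once, the token rate cannot exceed memory bandwidth divided by model size. The sketch below applies that estimate. The model size, weight precision, dual-channel configuration and target rate are hypothetical values chosen for illustration, and the bound only applies when the model runs on the CPU from system RAM, not when it sits entirely in a GPU's own memory.

```python
# Back-of-envelope ceiling on token rate for memory-bound LLM inference on a CPU.
# Assumptions (illustrative, not from the article):
#   - each generated token reads every model weight from system memory once
#   - dual-channel DDR5 with a 64-bit (8-byte) data path per channel
#   - caches, batching, KV-cache traffic and compute limits are ignored


def peak_bandwidth_bytes_per_s(mt_per_s: int, channels: int = 2) -> float:
    """Theoretical peak memory bandwidth in bytes per second."""
    return mt_per_s * 1_000_000 * 8 * channels


def max_tokens_per_s(params_billion: float, bytes_per_param: float, mt_per_s: int) -> float:
    """Upper bound on tokens/s when reading the weights is the bottleneck."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    return peak_bandwidth_bytes_per_s(mt_per_s) / model_bytes


def max_params_billion(target_tokens_per_s: float, bytes_per_param: float, mt_per_s: int) -> float:
    """Largest model (in billions of parameters) that could still reach the target rate."""
    params = peak_bandwidth_bytes_per_s(mt_per_s) / (target_tokens_per_s * bytes_per_param)
    return params / 1e9


for speed in (6400, 8000):
    # Hypothetical: a 7-billion-parameter model stored at 16 bits (2 bytes) per weight.
    rate = max_tokens_per_s(7, 2, speed)
    # Hypothetical: the largest 8-bit (1 byte per weight) model that could sustain 10 tokens/s.
    size = max_params_billion(10, 1, speed)
    print(f"DDR5-{speed}: <= {rate:.1f} tokens/s (7B, 16-bit); "
          f"~{size:.1f}B params max at 10 tokens/s (8-bit)")
```

Under these assumptions, the ceiling for the example 7B model rises from roughly 7.3 to 9.1 tokens/s, and the largest 8-bit model that could sustain 10 tokens/s grows from about 10.2 to 12.8 billion parameters. The same 25% bandwidth gain can be spent either on speed or on headroom for larger models; real systems will land below these bounds.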

As CUDIMM enables ever-faster memory in the future, the added bandwidth may also make AI and machine learning training possible with smaller memory capacities and in more compact devices. That would bring AI development and use to a wider range of hardware, making more AI-on-the-edge applications possible.

CUDIMM modules are designed as the memory of the future, and with added performance and stability, they will help unlock new AI capabilities in the years to come.